Emissions from Artificial Intelligence (AI) use

So far in this series, we have focused on the impacts of training AI models, reflecting where most of the literature has concentrated to date. However, the impacts of using AI once a model has been trained also need to be considered.

Single instances of AI use, known as ‘inferences’, may individually cost far less than training the model, but over time they can add up to more than the initial training cost. Some studies (Patterson, Wu) estimate that 30-65% of emissions from AI could be attributed to inference, a share that is likely to rise as AI becomes more widely used.
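
To make this cumulative effect concrete, here is a minimal Python sketch that estimates how many queries it takes for inference emissions to equal the one-off training emissions, and what share of lifetime emissions inference accounts for at a given level of use. The function names and both inputs (50 t CO2e to train, 2 g CO2e per query) are hypothetical placeholders chosen for illustration, not figures from Patterson, Wu or any other study.

```python
# Illustrative sketch: when do cumulative inference emissions overtake training?
# ASSUMPTION: all input figures are hypothetical placeholders, not measurements.


def breakeven_queries(training_kgco2e: float, per_query_kgco2e: float) -> float:
    """Number of queries after which inference emissions equal training emissions."""
    if per_query_kgco2e <= 0:
        raise ValueError("per-query emissions must be positive")
    return training_kgco2e / per_query_kgco2e


def inference_share(training_kgco2e: float, per_query_kgco2e: float, queries: int) -> float:
    """Fraction of total (training + inference) emissions caused by inference."""
    inference = per_query_kgco2e * queries
    return inference / (training_kgco2e + inference)


if __name__ == "__main__":
    TRAINING = 50_000.0  # hypothetical: 50 tonnes CO2e to train the model
    PER_QUERY = 0.002    # hypothetical: 2 g CO2e per query

    print(f"Break-even after {breakeven_queries(TRAINING, PER_QUERY):,.0f} queries")
    for n in (1_000_000, 25_000_000, 100_000_000):
        share = inference_share(TRAINING, PER_QUERY, n)
        print(f"{n:>12,} queries -> inference share {share:.0%}")
```

With these placeholder inputs, inference overtakes training after 25 million queries. Because the break-even point is simply the ratio of training emissions to per-query emissions, a model that is cheaper to train, or more heavily used, reaches it sooner.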

Figure 3: Tasks examined in Luccioni’s study, and the emissions they produce per 1,000 queries. The y-axis is logarithmic.

So when it comes to environmental costs, are all inferences alike? To answer this question, Luccioni and colleagues took a deep dive into AI inference, examining how energy use differs between tasks and between AI models, and comparing ‘task-specific’ with ‘general-purpose’ models. Their findings, summarised in Figure 3, show that different AI tasks use very different amounts of resources.

Two main takeaways emerge. First, generation tasks (i.e. having the AI create something, such as images or text) cause significantly more emissions than classification tasks. Second, image-related tasks cause considerably more emissions than non-image tasks. Image generation created far higher emissions than any other task studied. There is already significant debate around AI art and its ramifications for artists and copyright, and the results above show that its carbon footprint is another cause for concern. When it comes to AI’s impact on the environment, it seems a picture really is worth a thousand words… if not more.

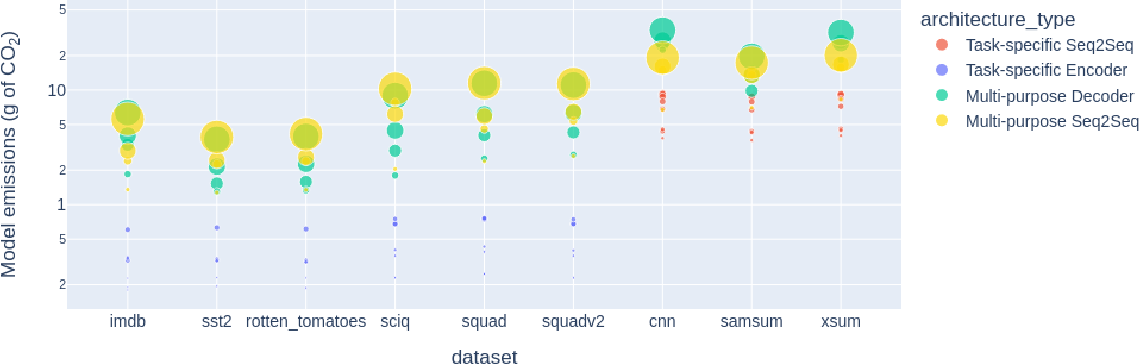

Figure 4: Mean model emissions for classification tasks on different datasets, comparing task-specific models with multi-purpose architectures. The y-axis is logarithmic, and dot size is proportional to model size.

The study also examined the differences between task-specific and general-purpose models, finding that general-purpose models produce an order of magnitude more emissions than task-specific models when used for classification tasks. For generative tasks the gap is similar but smaller, with general-purpose models generating 3 to 5 times more emissions than task-specific ones.

These findings suggest that developing task-specific rather than general-purpose AI could help reduce overall emissions from AI use. However, it may not be quite so simple. Other research has suggested that large models that don’t need to be retrained for different tasks, and so avoid retraining costs, could help reduce emissions. More research into the tradeoffs between training, retraining and inference costs could be invaluable in finding the most energy-efficient solution.
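
One way to reason about this tradeoff is a simple lifetime model, sketched here in Python under stated assumptions: each strategy has a one-off training cost (including any retraining) plus a per-query inference cost, and the crossover is the query volume at which both strategies emit the same total. Only the 4× per-query multiplier is drawn from the text, picked from within the 3 to 5 times range reported for generative tasks; the hypothetical `Strategy` class, the number of tasks and all training and per-query figures are illustrative assumptions, not results from any study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Strategy:
    """A hypothetical deployment strategy: one-off training cost plus per-query cost."""
    name: str
    training_kgco2e: float   # total training (and retraining) emissions, kg CO2e
    per_query_kgco2e: float  # average inference emissions per query, kg CO2e

    def lifetime_kgco2e(self, queries: int) -> float:
        """Training plus cumulative inference emissions after `queries` queries."""
        return self.training_kgco2e + self.per_query_kgco2e * queries


def crossover_queries(a: Strategy, b: Strategy) -> Optional[float]:
    """Query volume at which both strategies have equal lifetime emissions.

    Returns None if the per-query costs are equal, or if the two lines
    only cross at zero or negative query volume.
    """
    slope_gap = a.per_query_kgco2e - b.per_query_kgco2e
    if slope_gap == 0:
        return None
    queries = (b.training_kgco2e - a.training_kgco2e) / slope_gap
    return queries if queries > 0 else None


if __name__ == "__main__":
    # ASSUMPTION: five tasks, each served by its own task-specific model
    # (4 t CO2e each, including retraining), versus one general-purpose model
    # trained once (8 t CO2e) whose per-query cost is 4x higher.
    task_specific = Strategy("5 task-specific models", 5 * 4_000.0, 0.0002)
    general = Strategy("1 general-purpose model", 8_000.0, 4 * 0.0002)

    q = crossover_queries(task_specific, general)
    print(f"Crossover at roughly {q:,.0f} queries")
    for n in (1_000_000, 100_000_000):
        for s in (task_specific, general):
            print(f"{n:>12,} queries | {s.name:<24} | {s.lifetime_kgco2e(n):>10,.0f} kg CO2e")
```

Under these assumptions the single general-purpose model has lower lifetime emissions below roughly 20 million queries, and the five task-specific models win above it. Changing the hypothetical inputs moves the crossover, which is why measured values for training, retraining and inference costs matter.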

Of course, the use of AI wouldn’t be possible without hardware: servers, processing units and devices such as smart speakers running Amazon’s Alexa. Manufacturing this hardware typically requires rare earth metals obtained through labour-intensive mining, which can have significant impacts on the local environment. Crawford and Joler indicate that mining the minerals and metals used in AI can cause biodiversity loss, soil and water contamination, deforestation, erosion and high concentrations of polluting substances.

Environmental and sociopolitical issues also intersect here: resource extraction has knock-on effects such as low-paid, dangerous labour and harm to the health of local populations, often in poorer parts of the world.

It is, however, incredibly difficult to estimate the true impact of specific AI programs and devices due to the layers of supply chains and systems between the extracted resources and the finished product.

In the next article, we’ll explore that complexity and lack of transparency, examine how AI can directly help or harm the environment, and look at how renewables fit into this equation: The challenges and applications of AI (and the long road to renewables).

This article is part of the Artificial Footprints Series, taken from our report by Owain Jones.
